NeuroFocus XR

The project was part of my Master's thesis at the Royal College of Art, London.

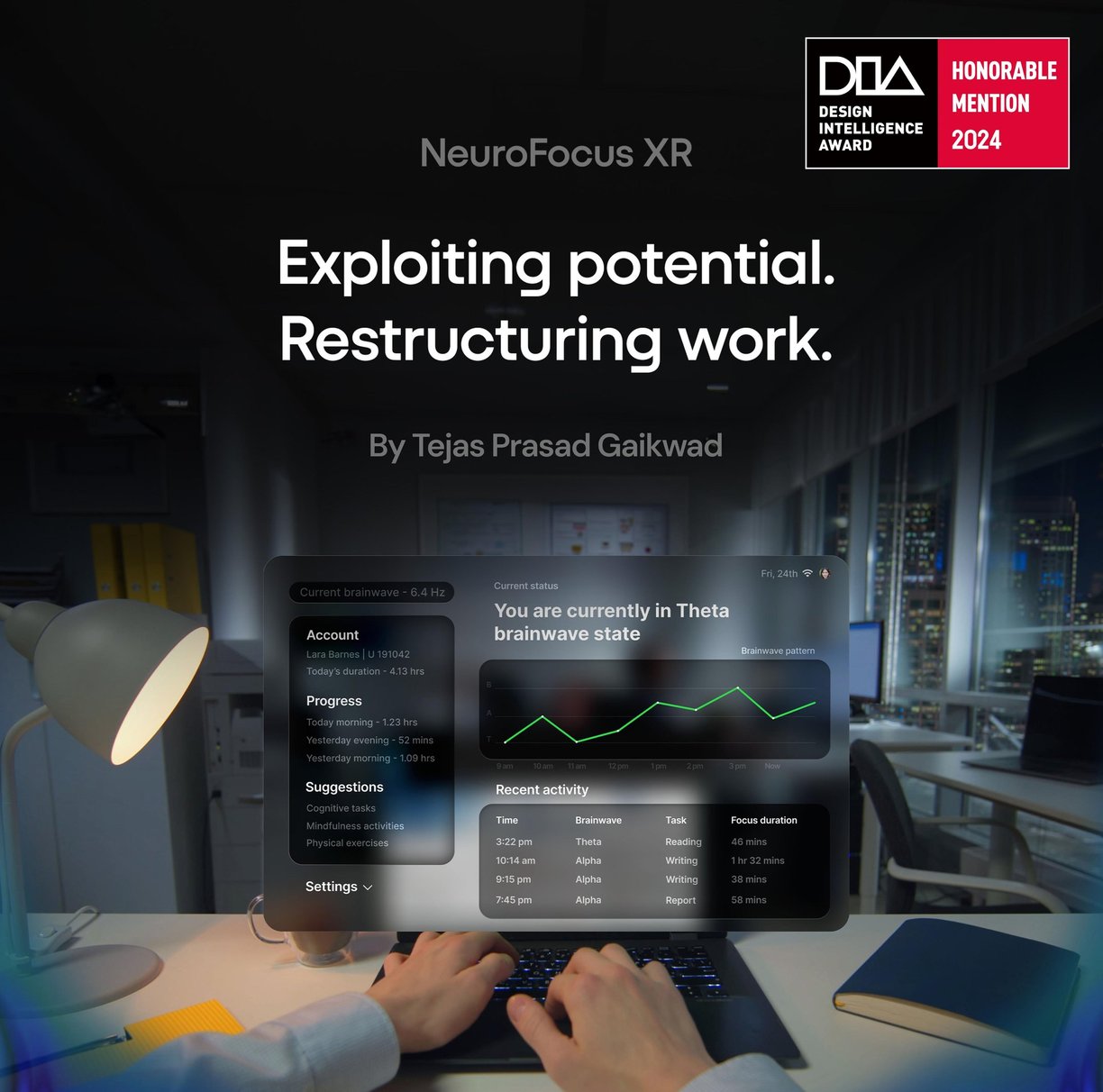

It recently won an Honorable Mention at the Design Intelligence Award 2024.

NeuroFocus XR is an AI-based neurofeedback therapy in Mixed Reality for adults with ADHD.

NeuroFocus XR empowers users with live brainwave feedback and visual stimuli, helping them self-regulate their emotions and actions in real time. By staying aware of their brainwave patterns, they can choose tasks that suit their current mental state, improving their cognitive performance and reducing stress levels.

Timeline

7th May - 24th July 2024

Domains

User Experience Design

User Interface Design

Spatial Development

Creative Technology & IOT

Human Cognition

Behavioral Therapy

Responsibilities

User Experience Research

Sketching

Prototyping

AI Integration

Visual Interaction

Mixed Reality Design

This was my Master's thesis project, guided by Alex Williams, Senior Tutor for MA Design Products at the Royal College of Art, London.

Tools

Figma

Adobe Creative Suite

Unity

TouchDesigner

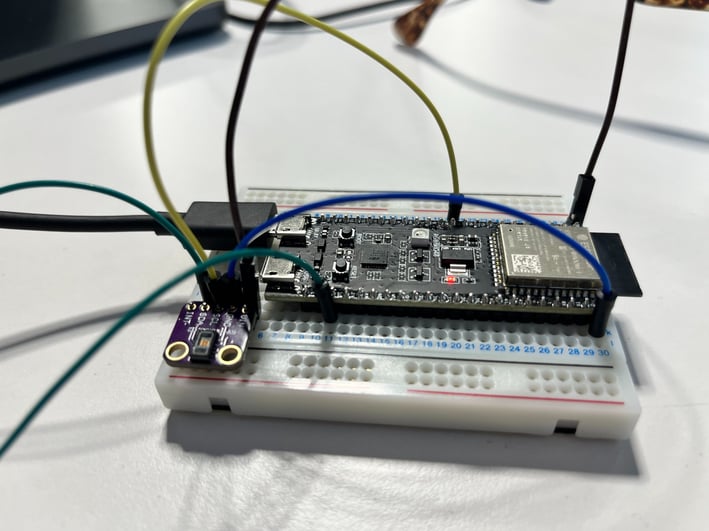

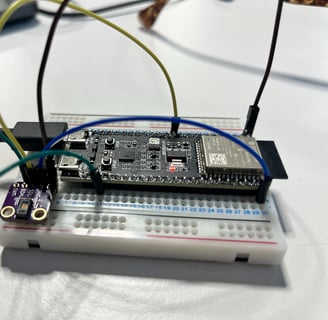

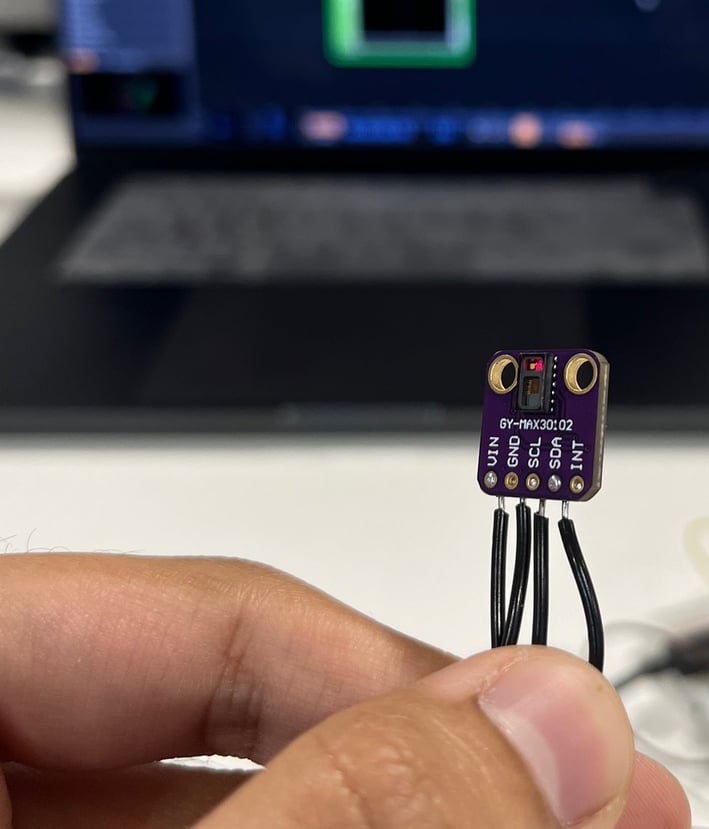

ESP32 & MAX30102

Protokol

The Process

1. Research: Identifying issue, Secondary data, Understanding solutions, Expert's insights

2. Integration: User persona, User journey, Information architecture

3. Crafting: Moodboard, High fidelity, User Interface, Visual Stimuli

4. Prototyping: Proof of concept, Coding internal components, XR prototype

5. Reflections: User testing, Next steps, DIA HM RCA 2024

ADHD is a prevalent neurological disorder that affects 5-10% of the world’s children and often persists into adulthood. Its traits can lead students to perform poorly academically, and it has also been observed that, when carried into adulthood, they can lead to involvement in crime.

ADHD symptoms & Neurofeedback therapy

ADHD Brainwaves

Cognitive-behaviour therapy


Medication and Cognitive-behavioral therapy (CBT) are the primary treatment options for ADHD.

Psychopharmacological treatment such as the prescription of Methylphenidate is not always effective and may have serious side effects.

Unfortunately, these primary treatment options have potential limitations, such as medication side effects, lack of behavioral improvement, high costs, and major time commitments.

Moreover, people are hesitant to accept medication because of adverse side effects, which include loss of appetite, anxiety, insomnia, headaches and irritability.

Long waiting lists for ADHD assessments, particularly in public healthcare systems, can significantly prolong the diagnostic process. Some individuals may have to wait months or even years to be evaluated by a specialist.

Somatic work, meditation and mindfulness movement are some of the initial harmless treatment options for people with ADHD.

After neurofeedback therapy, patients report improvements in focus, emotional self-regulation, understanding of situations, social engagement, anger control, and more.

Underlying Questions & Expert Insights

To unpack the contributing factors, I had to look deeper and answer the underlying questions, guided by Alex:

How do they operate?

How do they perceive their environment and the things around them?

What tasks do they do well? And for how long?

What’s their productivity like? Are they any different?

What if we liberate people with ADHD from their burdensome medication plans?

What if we let their creativity run wild and free?

How do we help these people realize their full potential?

Work style

Mindfulness


Expert’s insights into tackling such a critical condition

However, existing neurofeedback therapies are expensive and require long, consistent sessions.

The games are not engaging enough and have poor-quality graphics. Sessions are appointment-only and require a neurofeedback therapist to be present throughout, and people have mixed feelings about them.

Newly emerging approaches have the potential to change people's mindsets towards these methodologies and the technologies through which they can be delivered.

By 'they', I mean adults with ADHD symptoms (diagnosed or undiagnosed) who suffer unknowingly, leading to a decline in their workplace productivity.

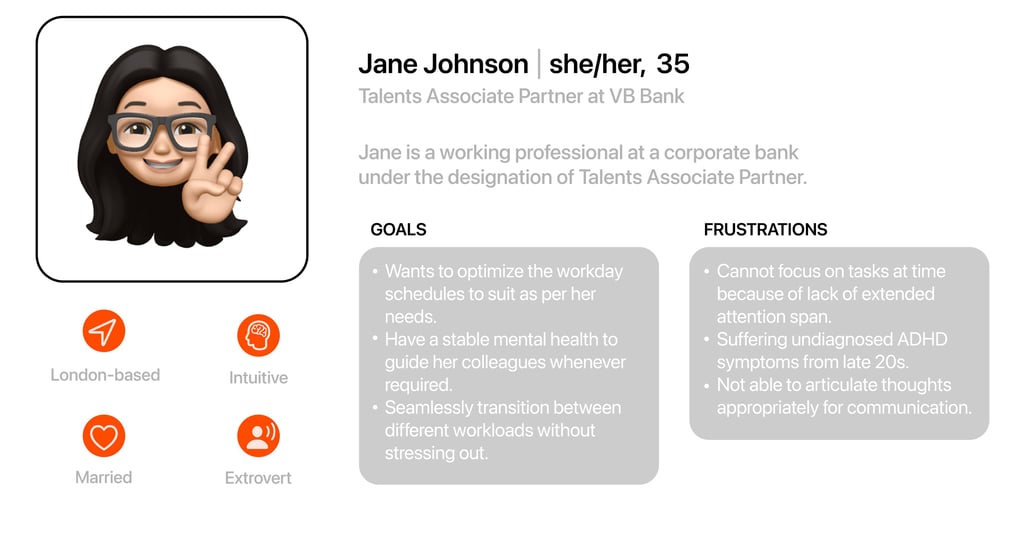

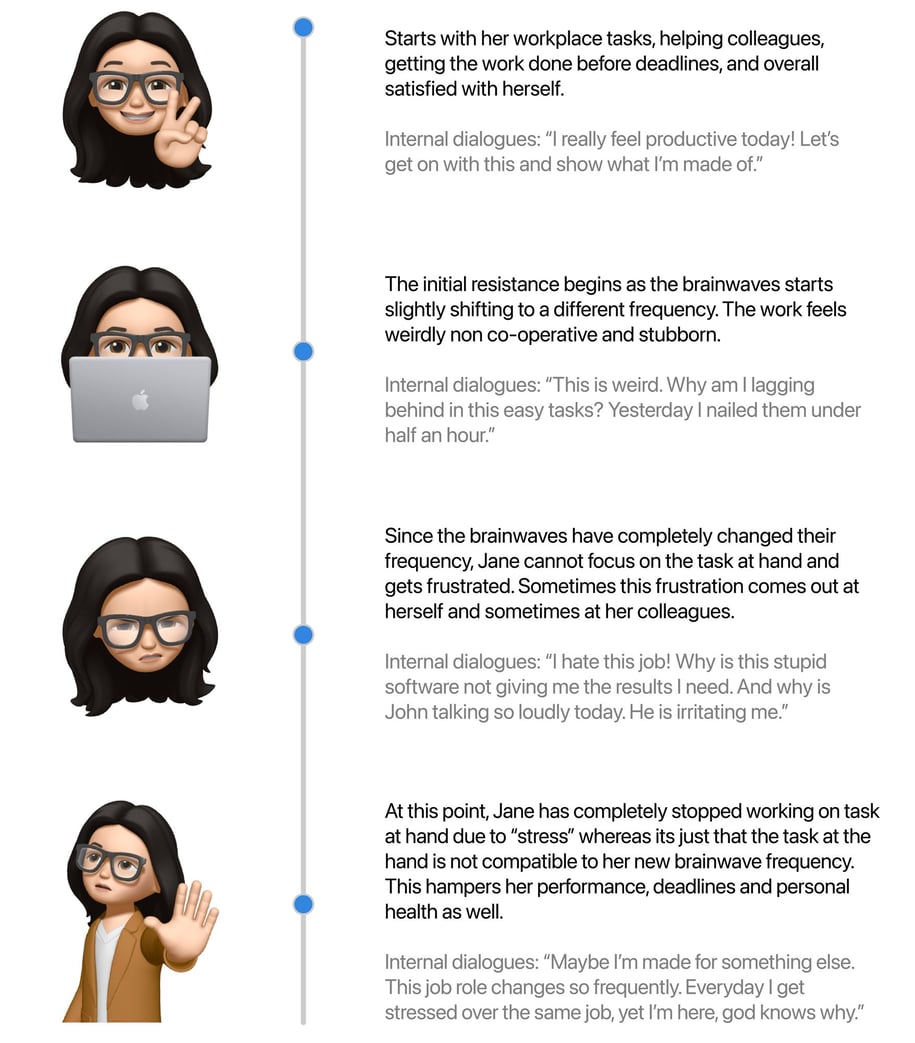

User persona

Developing a user persona helped me understand what the end users would really value. Since they were adults across many different professions, creating a persona for each would not have been ideal. Hence, I chose a median and tried to address most of their workplace issues, both physical and mental.

For the user journey, I mapped how and where she encounters problems on a day-to-day basis. Whether technical or mental, all of these issues affect our brainwave patterns and cause them to change.

User journey

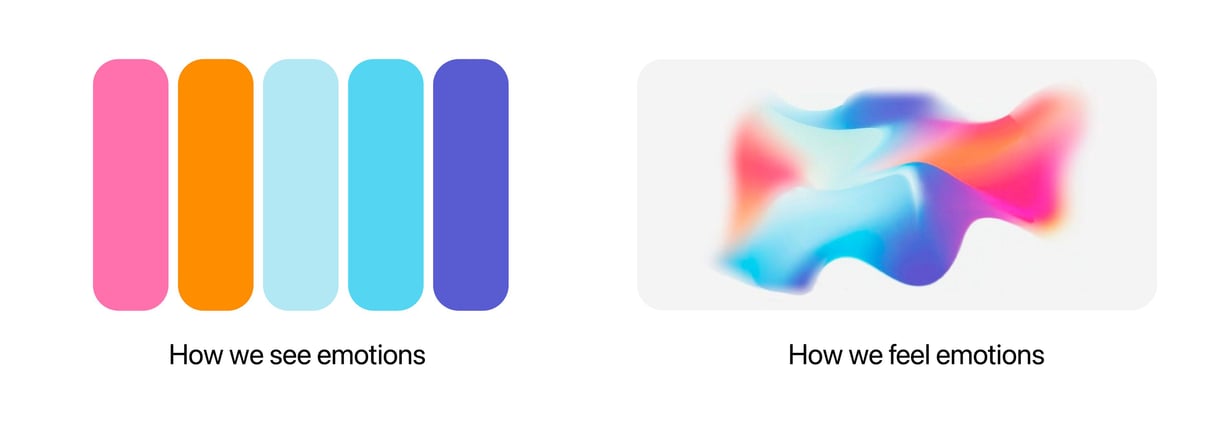

Emotions

We human beings are emotional creatures. Throughout history, we have slowly come to understand and categorize our emotions. But what we often miss is that this is not how we feel them: we experience emotions as a continuous wave.

Here, I have categorized the emotions into Care, Joy, Guilt, Fear and Sadness, and displayed how we actually experience them.

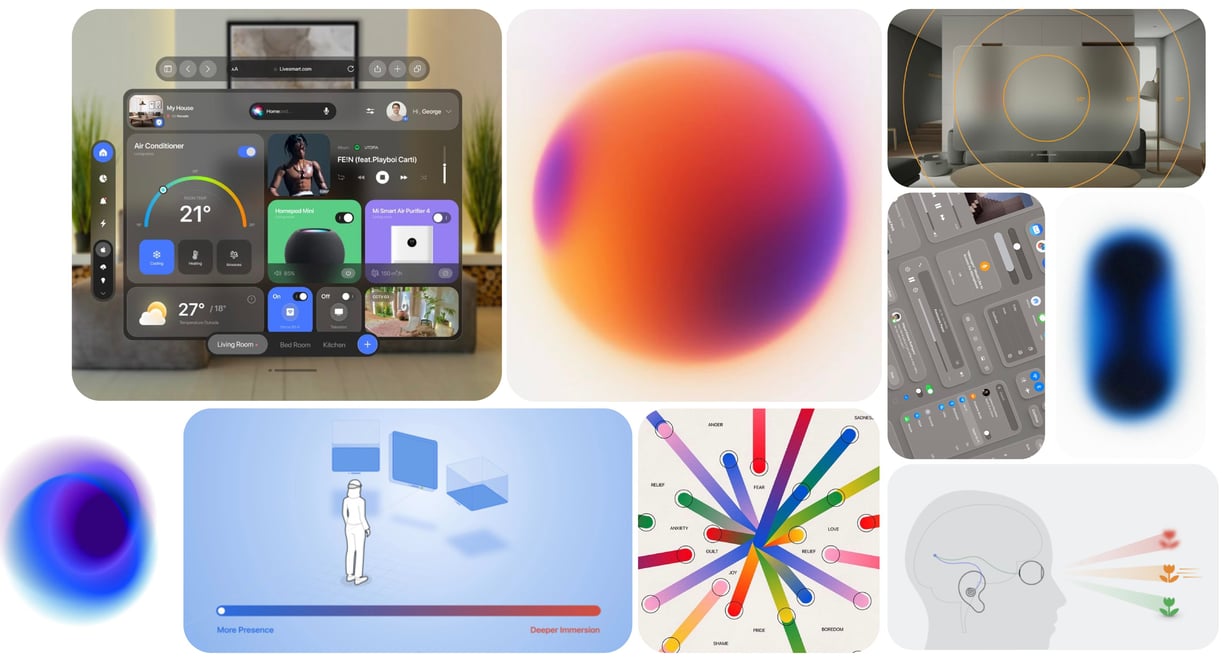

Moodboard

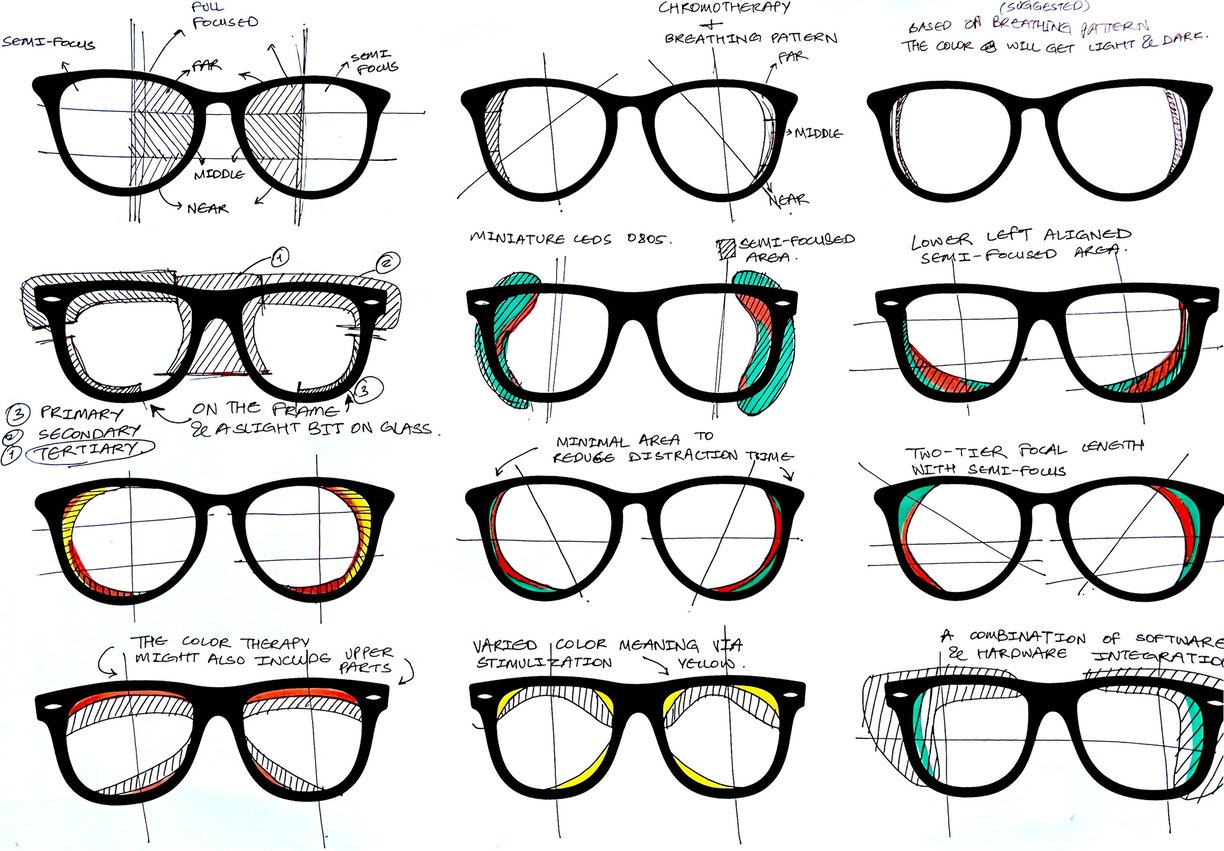

The Moodboard served as a reference for keeping some visual cues in mind while designing the main platform. Since Mixed Reality is a combination of physical and digital aspects, it was tricky to find the balance, especially when dealing with brainwaves and color.

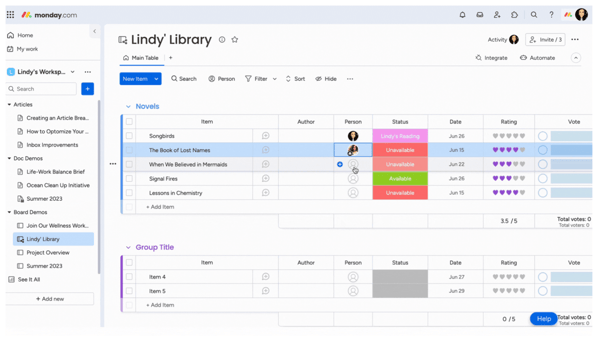

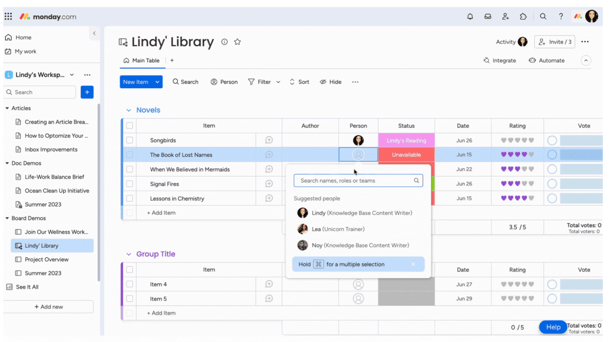

High Fidelity

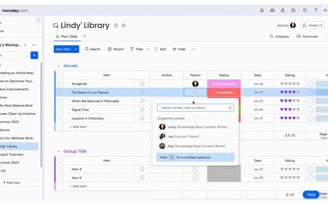

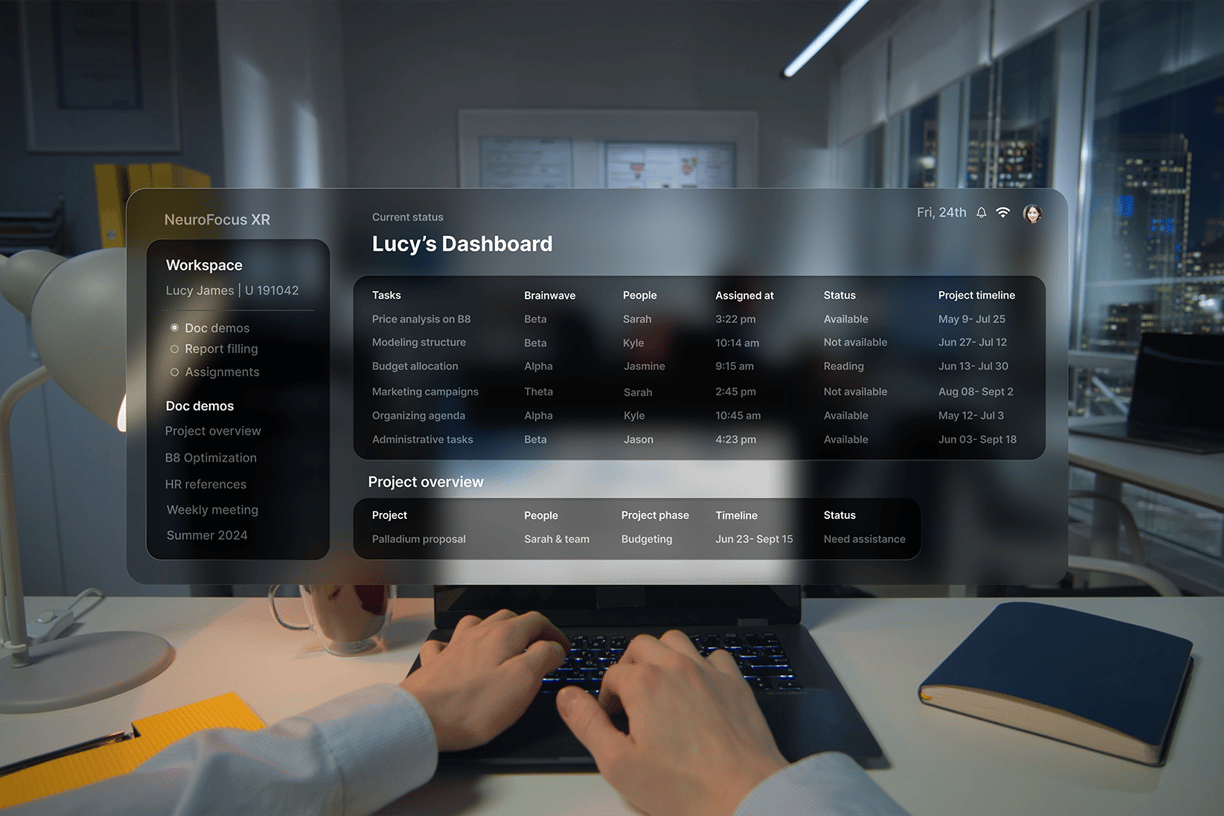

While creating the high-fidelity designs, I took insights from similar workplace platforms, such as Monday. This helped me understand which tools and parameters were used most and how efficient they were.

Keeping the design guidelines in check, I made sure the screens did not block too much of the person's view, because they were supposed to aid the user in working, not obstruct them.

Deciding how much of the brainwave-based color gradient should be visible was challenging: too little, and the user would not see it in their semi-focused vision angle; too much, and it would interfere with the user's attention and lose its purpose.

The dashboard includes a separate panel showing which person performs best in which brainwave state. This way, tasks can be assigned accordingly, so that no one is burdened with unnecessary workload and work friction.

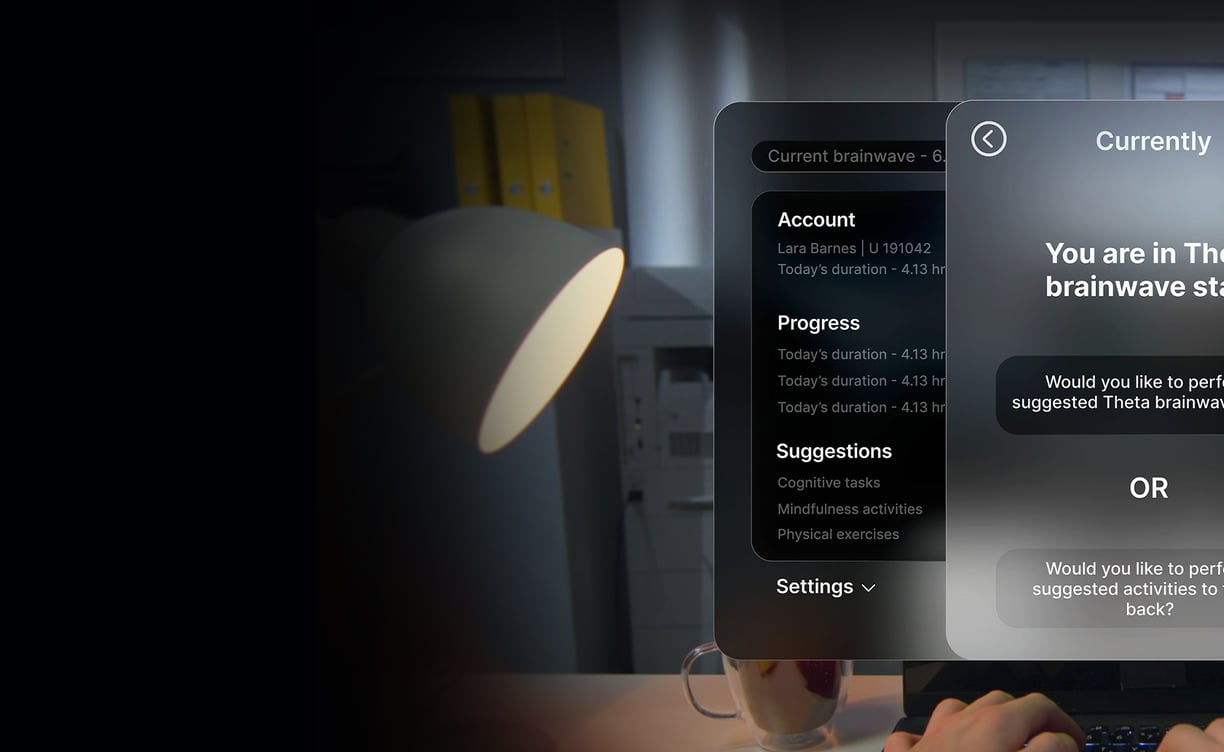

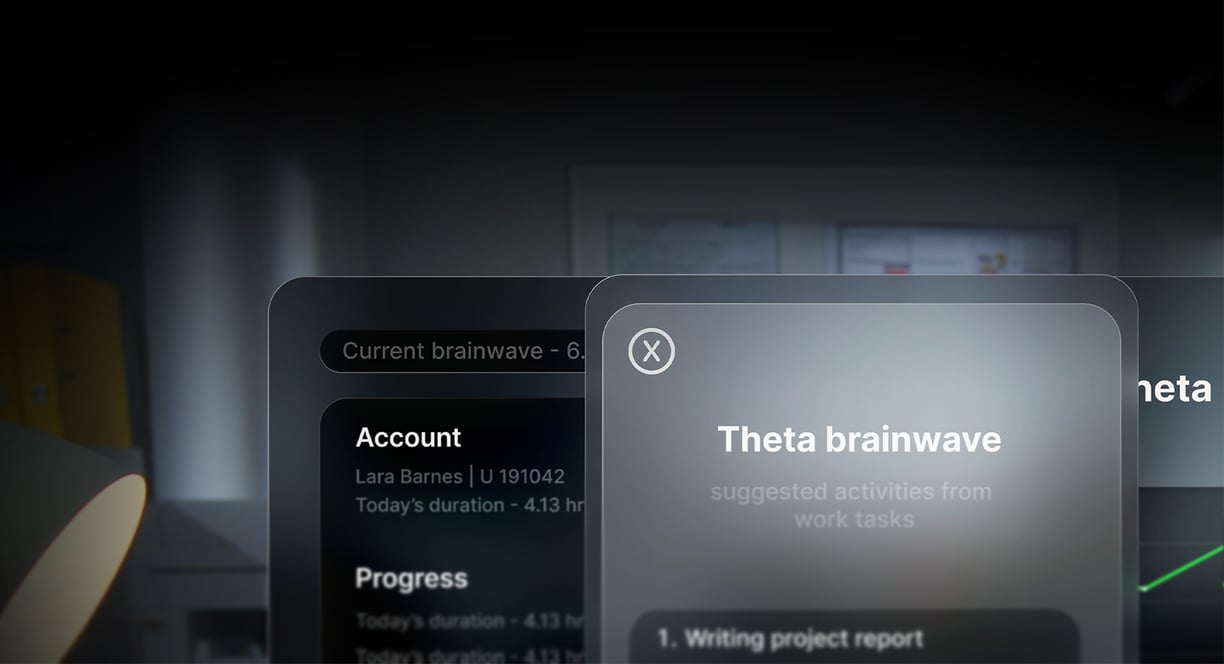

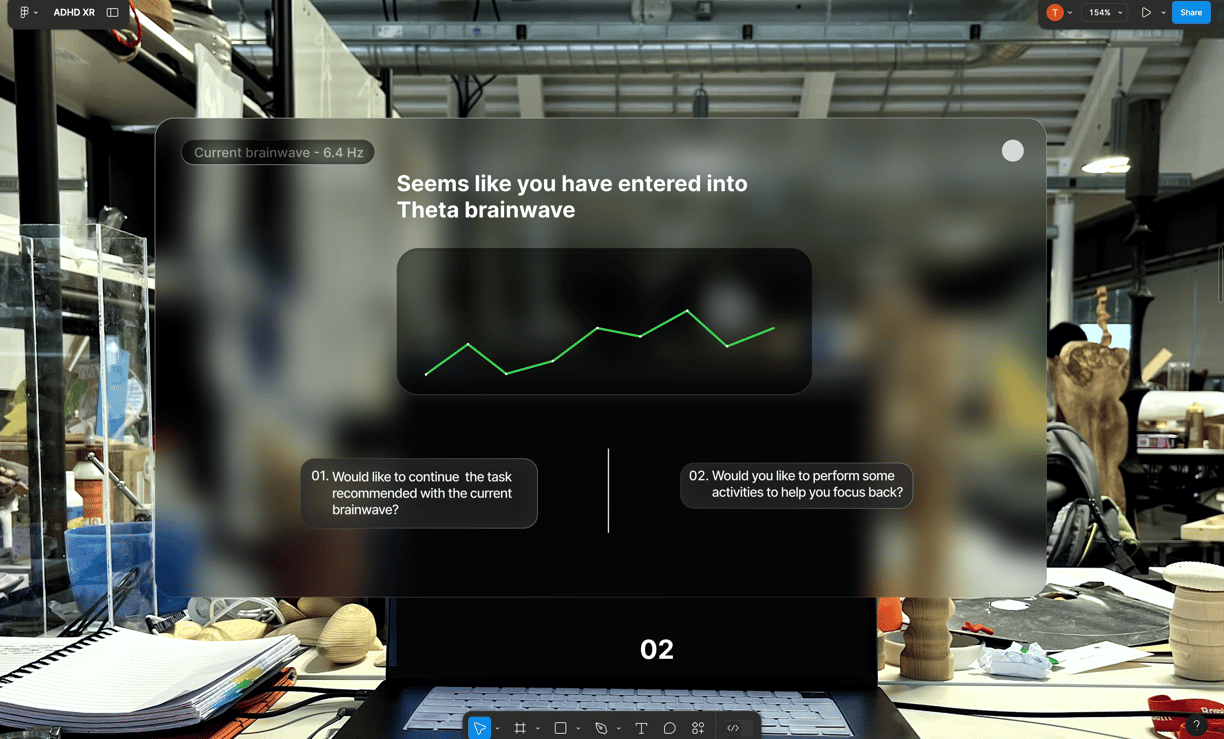

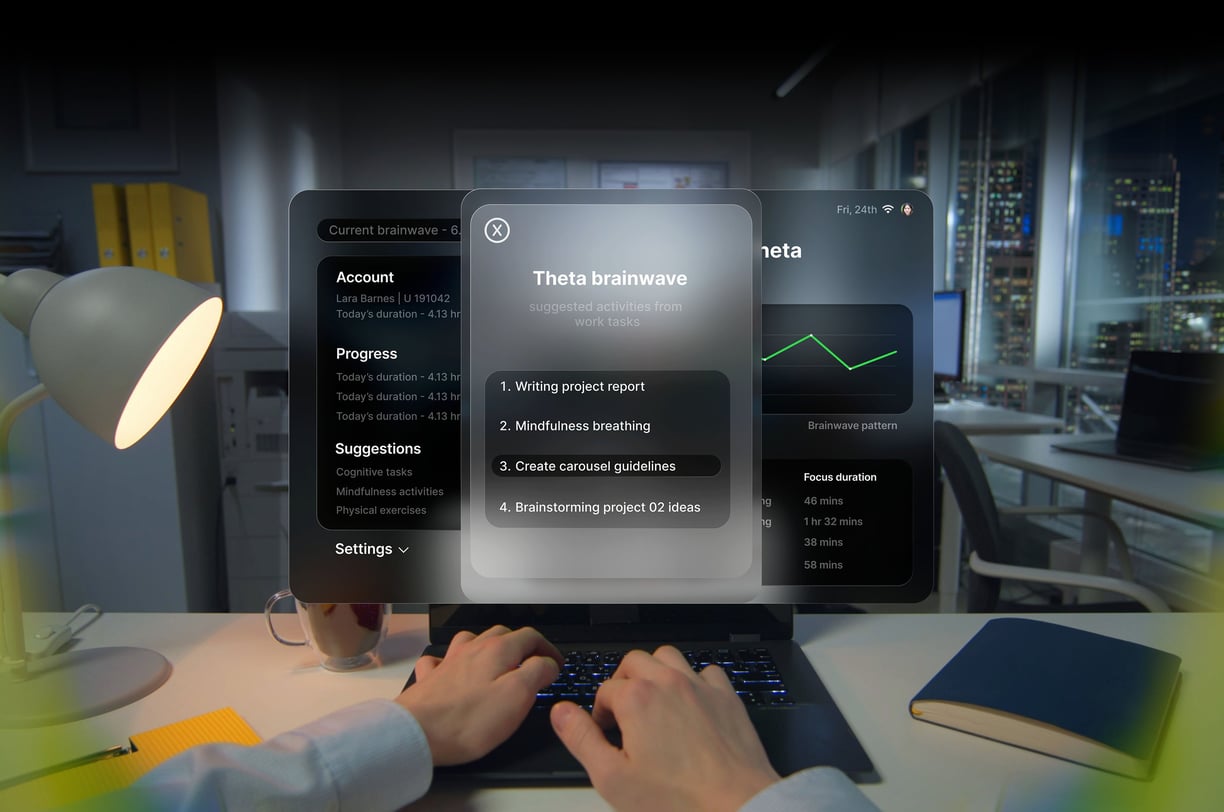

Initial User Interface

Next, I created the user interface that lets users know their brainwave pattern. Based on this, it then suggests tasks suited to their current state. My aim was to keep the screens to a minimum and display them only when absolutely required, so that people can focus on their work.

Moreover, I understand that there would be ethical and privacy concerns about access to other people's brainwave states, but I am sure we could come up with a solution that benefits mental health, the employee and the employer.

Brainwaves & Visual Stimuli

The visual stimuli are placed in the semi-focused area of the human vision angle so that users can focus on their task while staying aware of their brainwave patterns.

This way, users get live feedback when they become distracted or zone out: changing color gradients at the side of their vision prompt them to switch to a task better suited to their current brainwave state, or to take a break.


Brainwave patterns

Semi-focused vision placement

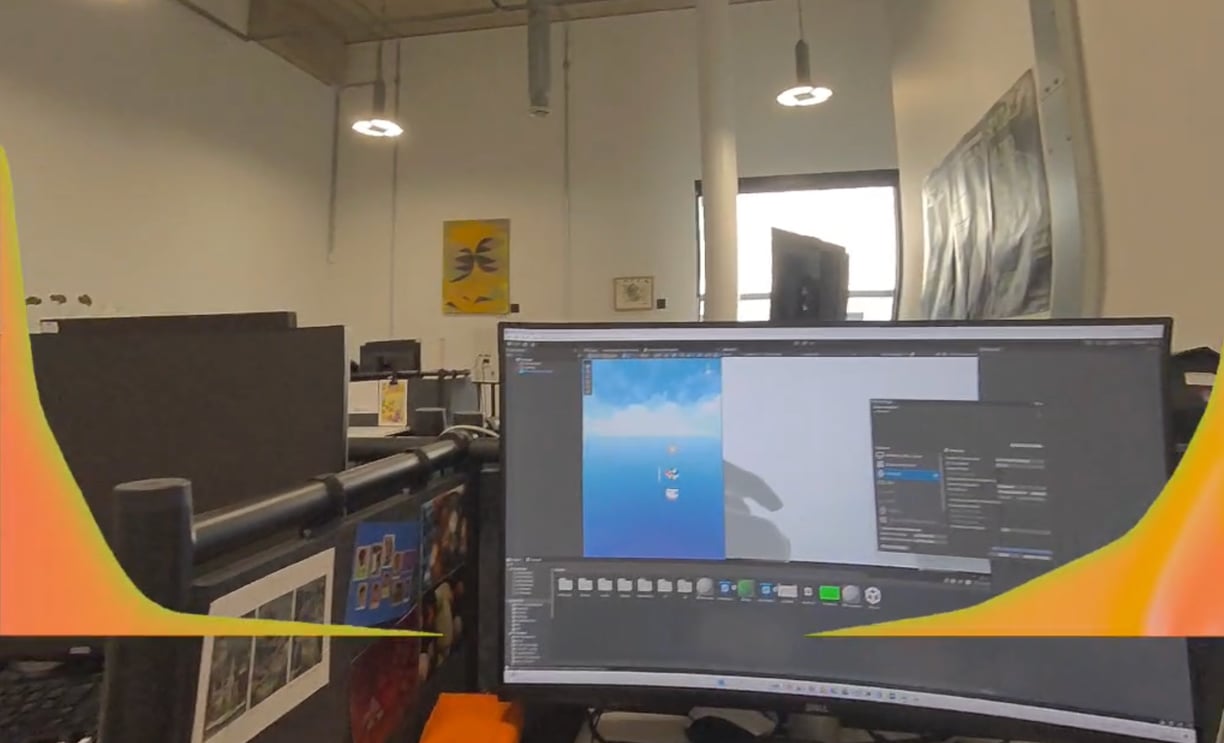

The final working prototype interacts with a biometric sensor to display colors according to the user's brainwave states, making it individually optimized for them in Mixed Reality.

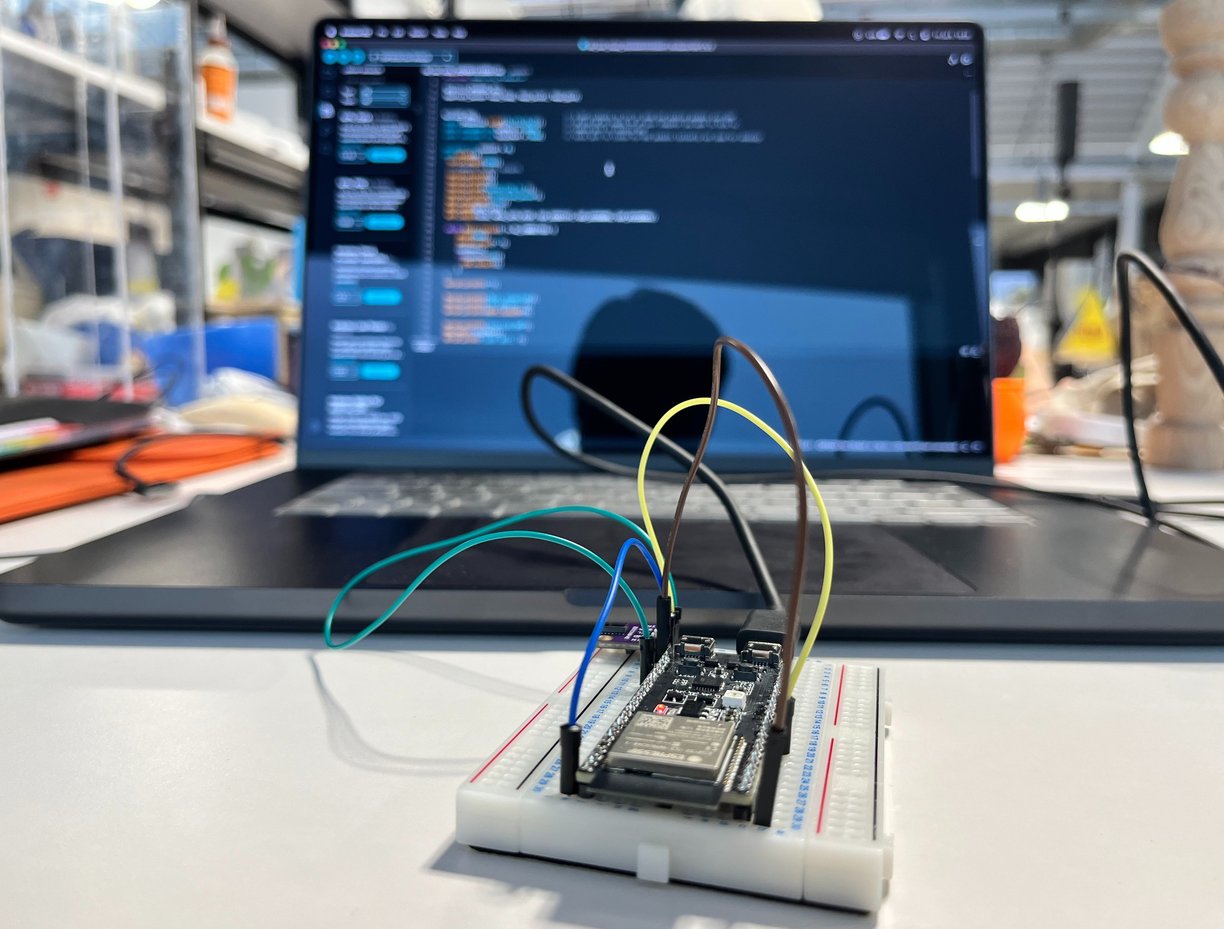

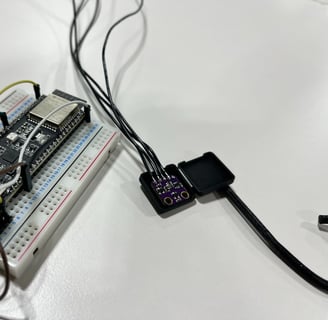

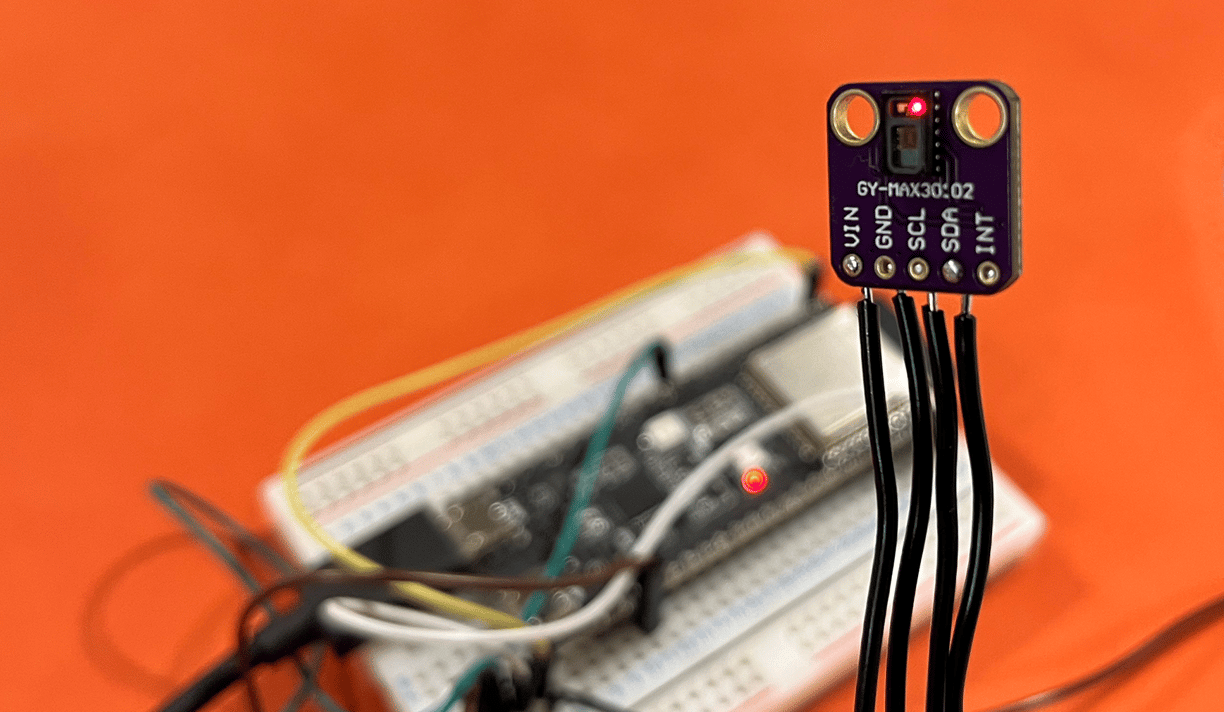

The most challenging part of the project was configuring the sensor to drive the color in real time. This involved many hardware, software and coding issues during development. The sensor also had to be soldered so that the connection was reliable.

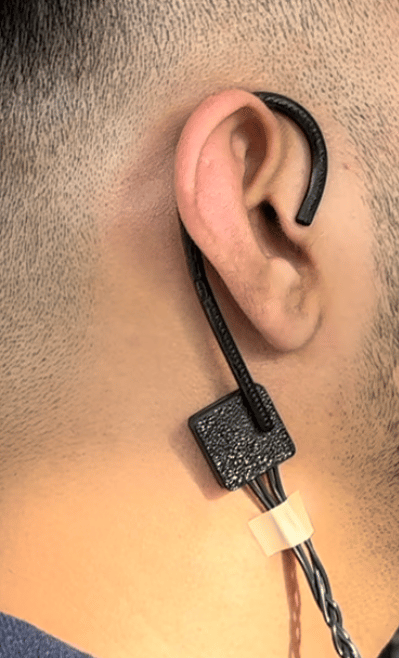

I also 3D printed a small housing for the sensor so that it could be attached behind the ear, near the posterior auricular nerve, letting us track the heart rate and calculate the brainwave state.

Working Prototype


The journey was not at all smooth, as I had anticipated given the complicated dynamics of many different aspects working together. Since brainwave sensors were expensive and out of market reach due to ethical reasons, I used a heart-rate sensor to monitor heartbeats and then calculate the brainwave state the person was in.

This way, I could prototype my design and also check whether the components worked well together.
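As a rough illustration of the heart-rate-as-proxy idea, the sketch below turns beat timestamps into beats per minute and maps the result to a coarse state label. The thresholds, the labels and both function names are hypothetical placeholders, not the calibration used in the prototype, and heart rate is only a loose stand-in for brainwaves measured by EEG.

```python
from statistics import mean

# Hypothetical upper bounds (beats per minute) for each proxy state.
# Illustrative placeholders only, not the project's calibration.
PROXY_STATES = [
    (60.0, "theta-like (drowsy)"),
    (75.0, "alpha-like (relaxed)"),
    (95.0, "beta-like (focused)"),
]
FALLBACK_STATE = "high-beta-like (stressed)"


def bpm_from_beats(beat_times_ms):
    """Average heart rate in BPM from beat timestamps given in milliseconds."""
    if len(beat_times_ms) < 2:
        raise ValueError("need at least two beats to measure an interval")
    intervals = [b - a for a, b in zip(beat_times_ms, beat_times_ms[1:])]
    return 60_000.0 / mean(intervals)


def proxy_state(bpm):
    """Return the first state whose upper bound lies above the given BPM."""
    for upper_bound, label in PROXY_STATES:
        if bpm < upper_bound:
            return label
    return FALLBACK_STATE
```

Keeping the thresholds in one table makes it easy to tune them per person, which fits the aim of an individually optimized experience.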

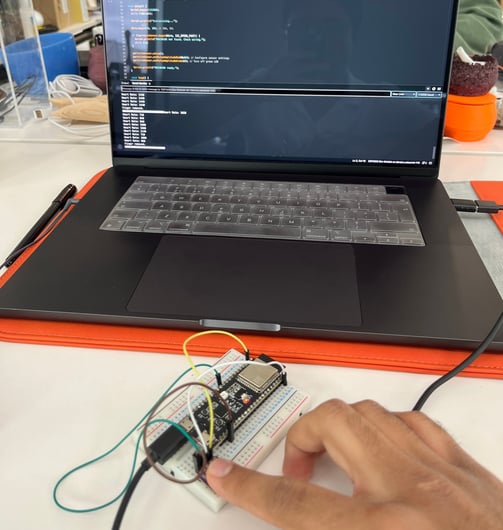

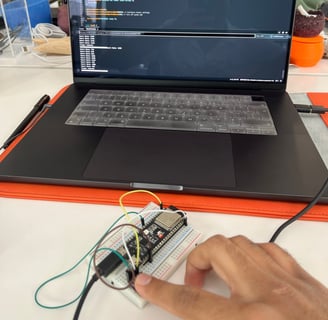

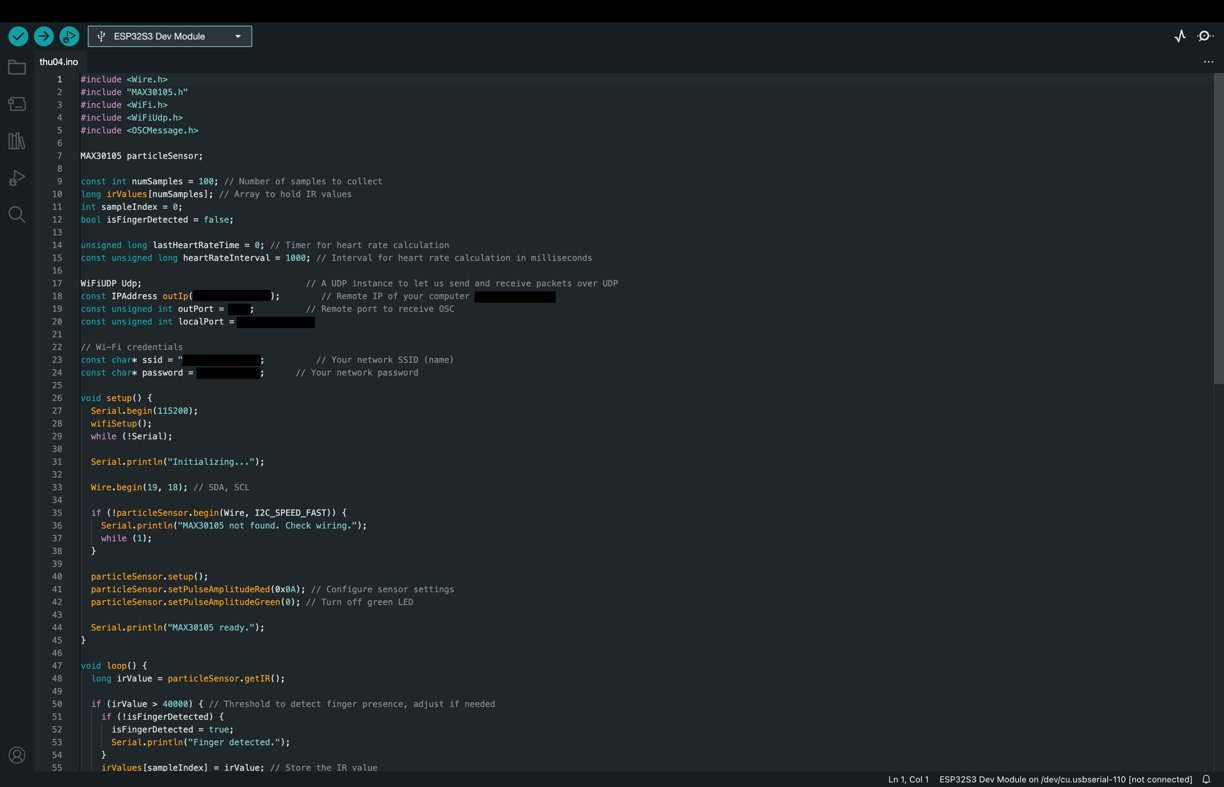

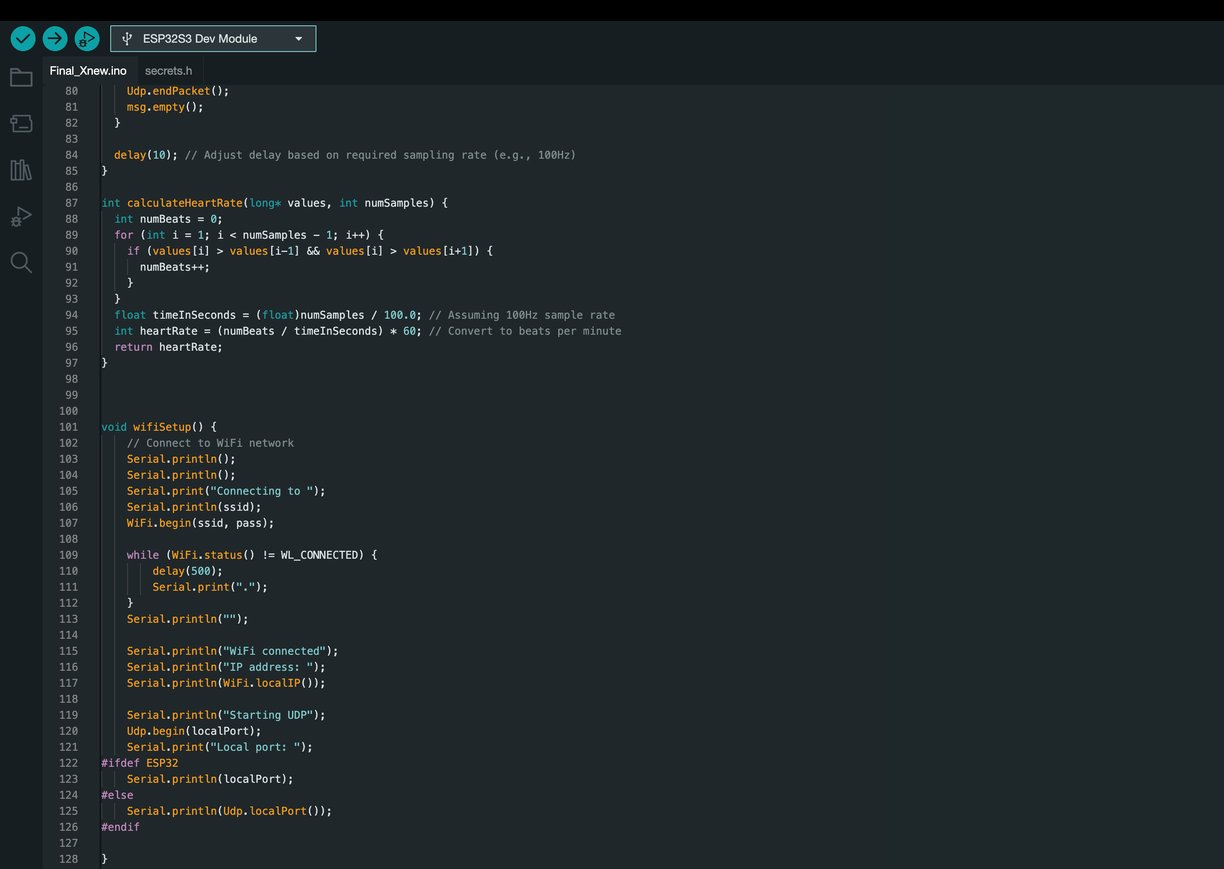

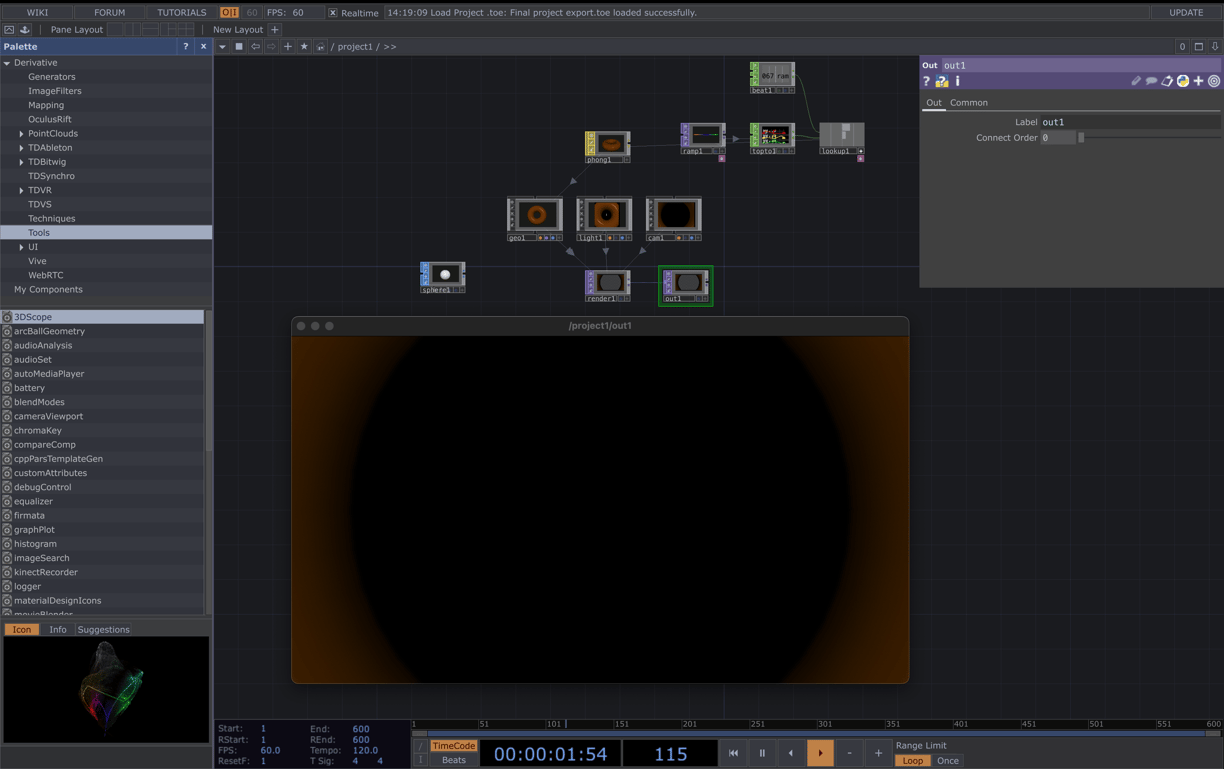

Here I used an ESP32, a MAX30102 heart-rate sensor, the Arduino IDE, and TouchDesigner for the color gradient.

After eight failed coding attempts, each in a new file, and numerous visits to the White City campus’ creative coding consultations, I finally arrived at code that worked for my specific sensor and achieved the goal I wanted.

Technician Kyle helped me understand and build the code, and Hamid from the Robotics research lab helped me solder the connections.

Hence, shoutout to Kyle and Hamid (RCA's technicians) :)

For some reason the code could not connect to the school’s Eduroam Wi-Fi, nor to my personal iPhone hotspot, so I had to use my friend’s Android hotspot to transmit the data over Wi-Fi from the ESP32 to TouchDesigner.

You can find the prototyping videos at the link.

Finally, it was configured correctly to wirelessly transmit the heart-rate readings to TouchDesigner and from there to the user's Mixed Reality device. I must admit this was one of the most crucial moments of the project.
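The write-up does not name the wire format used between the ESP32 and TouchDesigner. Assuming the readings travel as OSC messages over UDP (an assumption, suggested only by Protokol appearing in the tools list), the sketch below shows what one such packet looks like on the wire. The address `/heartrate` and both function names are hypothetical, and the device itself would run Arduino code rather than Python.

```python
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_float(address: str, value: float) -> bytes:
    """Build one OSC message carrying a single big-endian float32 argument."""
    return _pad(address.encode("ascii")) + _pad(b",f") + struct.pack(">f", value)


def decode_osc_float(packet: bytes):
    """Inverse of encode_osc_float, handy for checking a packet by hand."""
    end = packet.index(b"\x00")
    address = packet[:end].decode("ascii")
    offset = (end // 4 + 1) * 4  # skip the address and its padding
    if packet[offset:offset + 2] != b",f":
        raise ValueError("expected a single float argument")
    (value,) = struct.unpack(">f", packet[offset + 4:offset + 8])
    return address, value
```

A packet built this way could be sent with a standard UDP socket (`socket.socket(socket.AF_INET, socket.SOCK_DGRAM).sendto(packet, (host, port))`), where `host` and `port` would point at the machine running TouchDesigner.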

Coding internals

With a little help from ChatGPT and the technicians, combined with my own knowledge, we developed the code from scratch.

Visual Interaction

Now that the connection was established, it was time to put it all together and test it on users. The sensor was coded to stay on at all times, continuously reading the user's heartbeats and transmitting them wirelessly.
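A continuous stream of readings tends to jitter from beat to beat. One common way to steady it before driving a visual is a rolling average, sketched below. Whether the prototype smoothed its readings is not stated, so this is an assumption, and the class name and window size are hypothetical.

```python
from collections import deque


class RollingAverage:
    """Keeps the last `window` readings and reports their mean."""

    def __init__(self, window: int = 5):
        if window < 1:
            raise ValueError("window must be at least 1")
        # deque with maxlen drops the oldest reading automatically
        self._values = deque(maxlen=window)

    def update(self, reading: float) -> float:
        """Add a new reading and return the current smoothed value."""
        self._values.append(reading)
        return sum(self._values) / len(self._values)
```

A larger window gives a calmer color transition but reacts more slowly to real changes, so the window size is a trade-off between stability and responsiveness.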

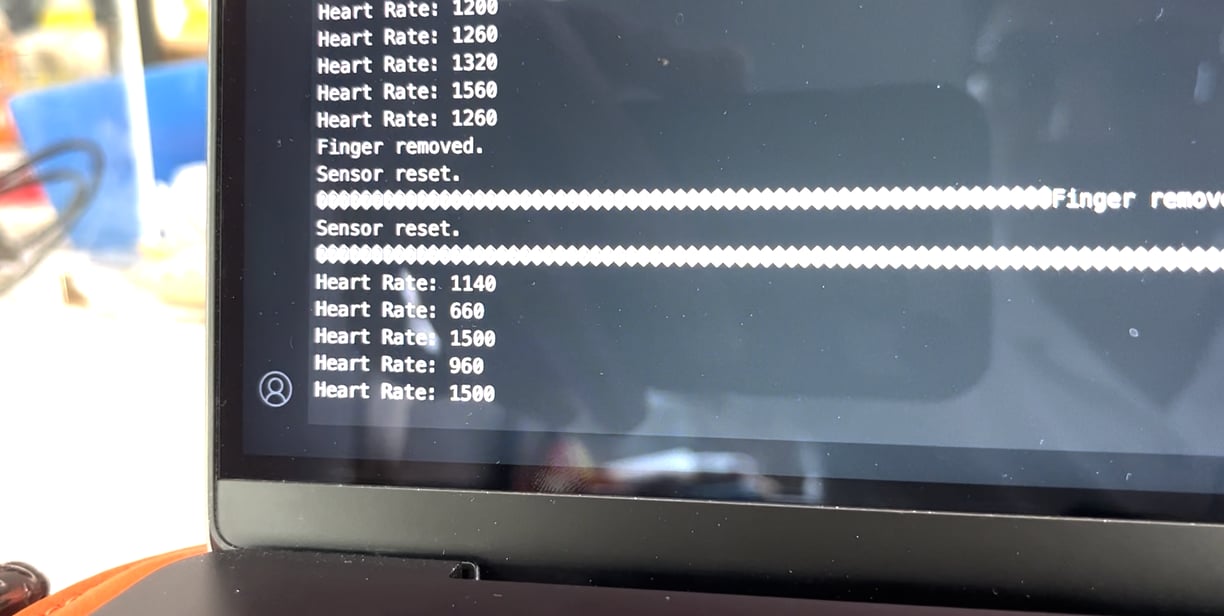

A successful heart-rate reading being captured. The value is the result of a complex calculation, produced so that TouchDesigner can read it easily and use the right color in the gradient to indicate the brainwave pattern.

The simple TouchDesigner node structure used for the color gradient. Based on your heartbeats, it changes and transitions between colors, depicting the change in your brainwaves.
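The transition between colors can be thought of as interpolation between gradient stops. The sketch below shows that idea in plain Python rather than as a TouchDesigner network. The stop positions and RGB values are hypothetical placeholders, not the colors used in the project.

```python
# Hypothetical gradient stops: (beats per minute, (r, g, b)) sorted by BPM.
STOPS = [
    (50.0, (0.0, 0.0, 1.0)),
    (75.0, (0.0, 1.0, 0.0)),
    (100.0, (1.0, 0.0, 0.0)),
]


def lerp_color(start, end, t):
    """Linear blend between two RGB tuples; t=0 gives start, t=1 gives end."""
    return tuple(s + (e - s) * t for s, e in zip(start, end))


def gradient_color(bpm, stops=STOPS):
    """Color for a heart rate, clamped to the first and last stops."""
    if bpm <= stops[0][0]:
        return stops[0][1]
    for (low, color_low), (high, color_high) in zip(stops, stops[1:]):
        if bpm <= high:
            t = (bpm - low) / (high - low)
            return lerp_color(color_low, color_high, t)
    return stops[-1][1]
```

Because readings between two stops blend smoothly, the color drifts rather than jumps, which suits a cue meant to sit at the edge of vision.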

Please find the prototyping videos at the link.

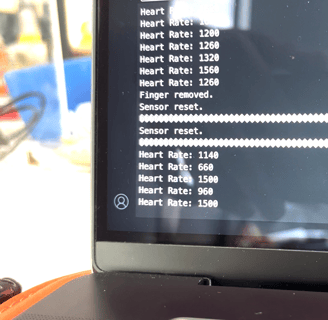

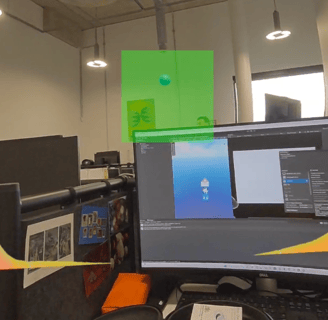

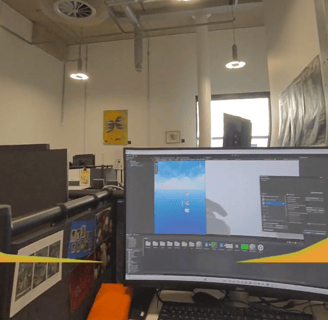

Prototyping Mixed Reality in Unity

I started prototyping on the Meta Quest 3 to understand chromatherapy and how well colors interact with the surroundings. Although the setup went fairly smoothly, there were a few failures along the way that I did not anticipate.

This is me prototyping in the RCA XR Lab.

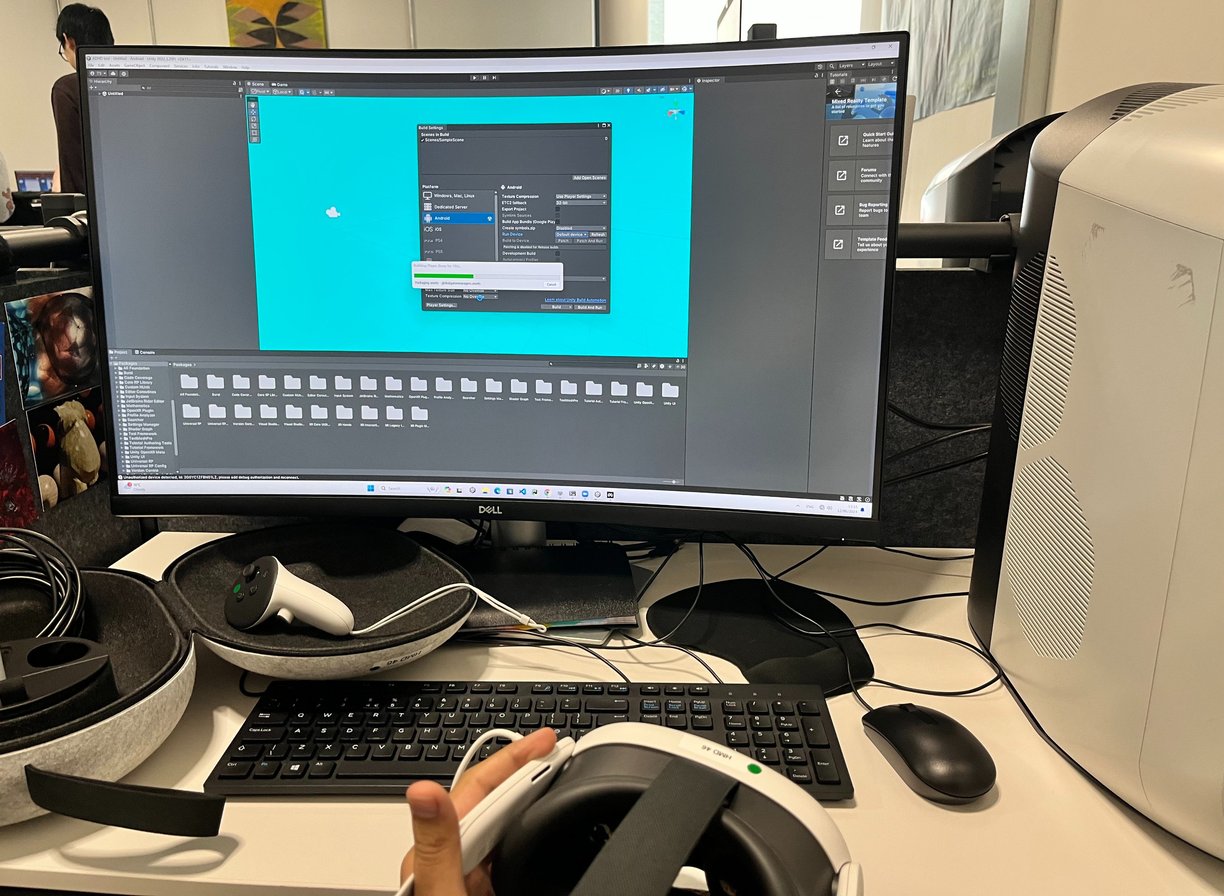

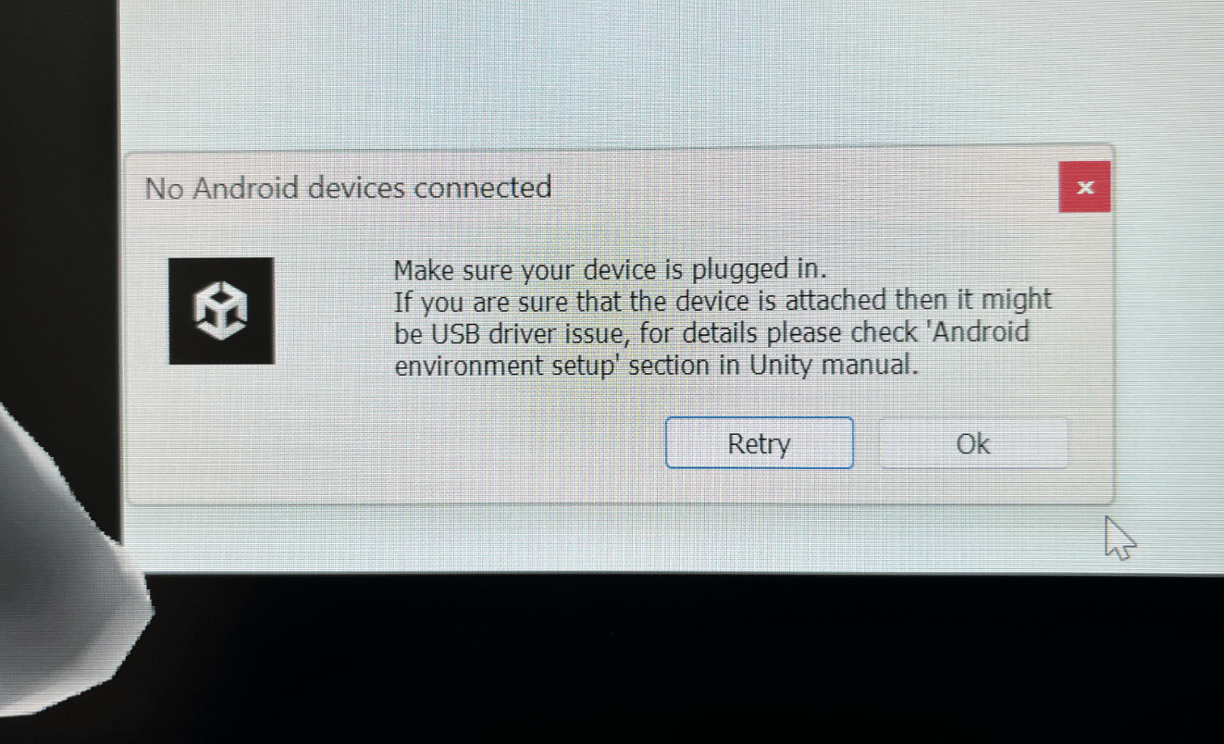

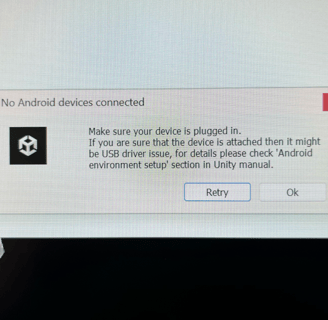

Failure #1

Even though the headset was fairly new, driver issues still brought the project's overall progress to a significant halt.

After the entire project was developed and set up in Unity, the final test run failed multiple times due to an unrecognized technical error on the headset during development. The solution turned out to be completely wiping the headset with a factory reset and trying again.

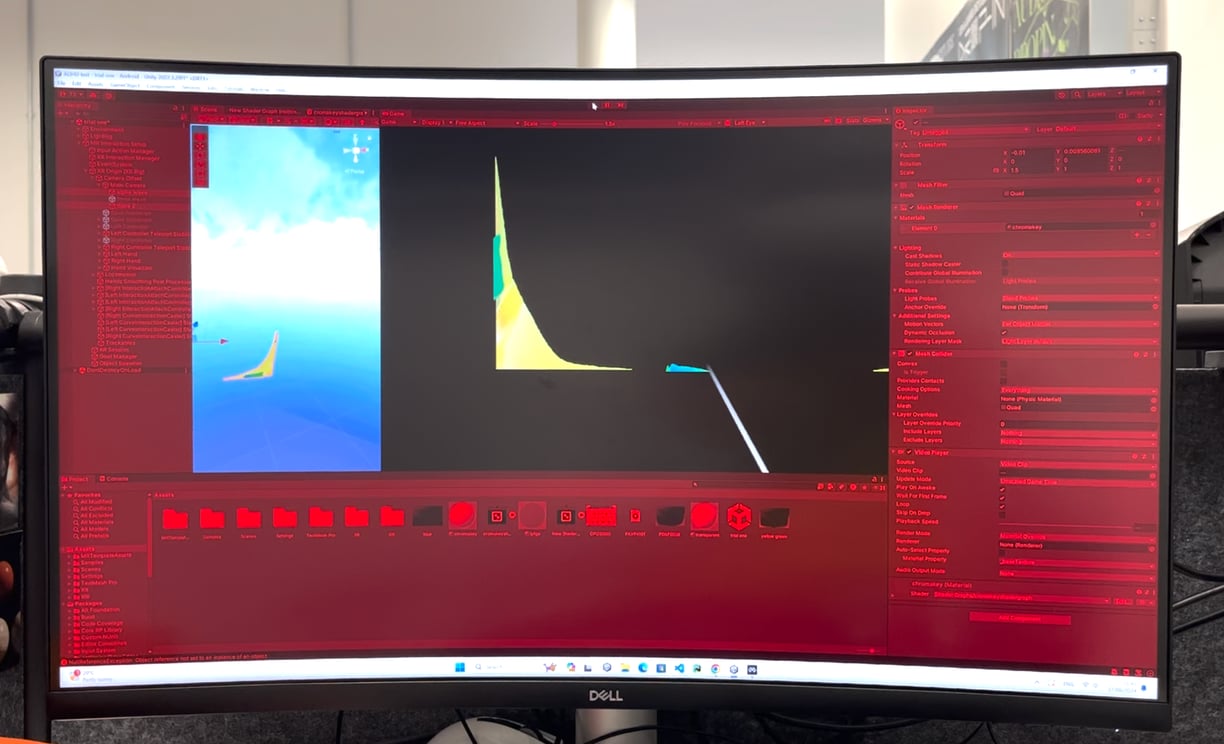

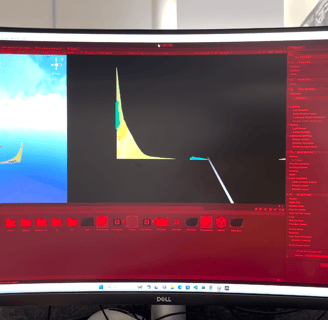

Failure #2

This was after the APK was installed on the device. I could preview it on Unity's main screen, but the gradient kept failing to load at the right time.

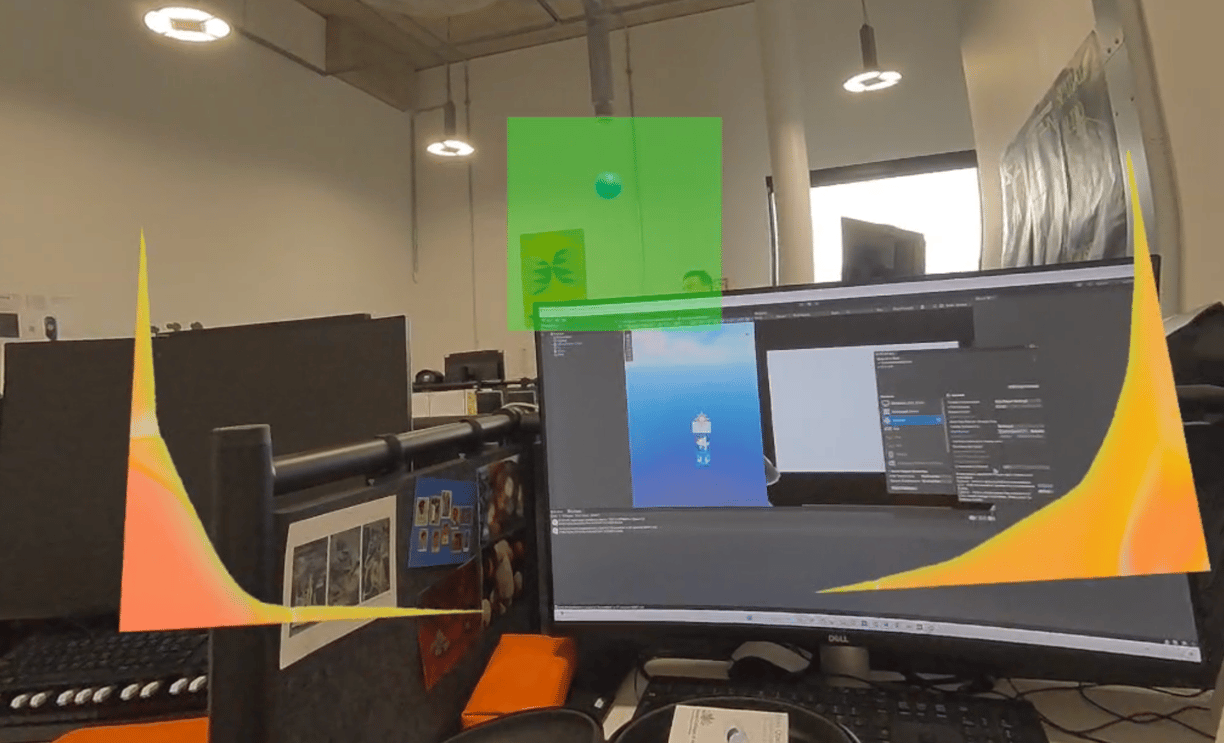

Successful trial #1

The first successful trial used a green quad and a sphere in space as references to understand the person's head tracking and direction of movement.

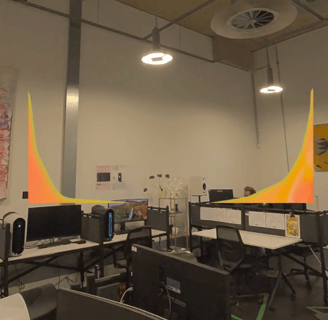

Successful trial #2

This time the screen was prototyped to move with the head, always staying in front, and designed to respond to brainwave patterns.

Proper placement in the window, experimenting with different ratios. This time the color gradient moved as the person moved their head, so it worked as a heads-up display informing the user of their brainwave patterns.

A first potential viewpoint: informing users of Alpha brainwave states while they read a research article.
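The geometry behind a head-locked panel can be sketched as follows: the panel sits at a fixed distance along the direction the head is facing, optionally rotated sideways toward the periphery. This is plain Python for illustration, not the Unity code from the prototype. The function name, axis convention and default values are assumptions; in Unity a similar effect is commonly achieved by parenting the object to the camera.

```python
import math


def head_locked_position(head_pos, yaw_deg, pitch_deg, distance=1.0, side_offset_deg=0.0):
    """World position of a panel held `distance` metres along the gaze direction.

    Convention assumed here: y is up, z is forward at yaw 0, positive yaw turns
    right and positive pitch looks up. `side_offset_deg` rotates the panel
    horizontally away from the centre of view, toward the periphery.
    """
    yaw = math.radians(yaw_deg + side_offset_deg)
    pitch = math.radians(pitch_deg)
    direction = (
        math.cos(pitch) * math.sin(yaw),
        math.sin(pitch),
        math.cos(pitch) * math.cos(yaw),
    )
    return tuple(p + distance * d for p, d in zip(head_pos, direction))
```

Recomputing this position every frame from the tracked head pose keeps the gradient at the same place in the field of view however the user turns.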

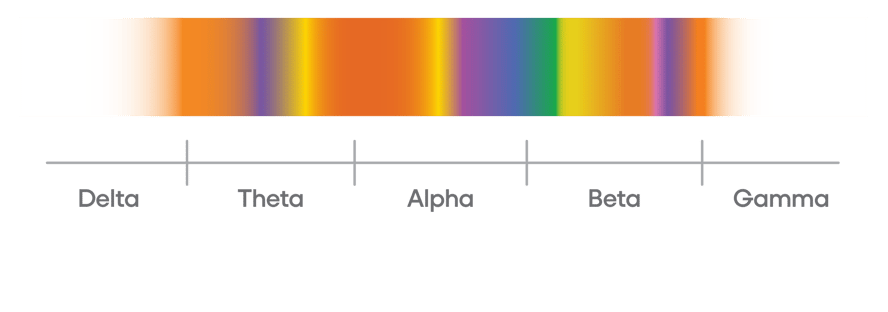

To clarify the classification of brainwaves and their related colors, I also designed a brainwave color scale so that users can identify their brainwave pattern from the colors that appear most often in their semi-focused vision angle.
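A color scale of this kind can be represented as a small lookup table. The sketch below uses the conventional EEG frequency bands, whose exact boundaries vary between sources. The associations are the commonly cited ones, and the color entries are placeholders rather than the colors of the actual scale.

```python
from typing import NamedTuple, Optional


class Band(NamedTuple):
    name: str
    low_hz: float
    high_hz: float
    association: str
    color: str  # placeholder, not the project's actual color


# Conventional bands; boundaries are approximate and differ between sources.
# The 100 Hz upper limit for gamma is an illustrative cut-off.
BANDS = [
    Band("delta", 0.5, 4.0, "deep sleep", "placeholder violet"),
    Band("theta", 4.0, 8.0, "drowsiness", "placeholder blue"),
    Band("alpha", 8.0, 13.0, "relaxed wakefulness", "placeholder green"),
    Band("beta", 13.0, 30.0, "active concentration", "placeholder yellow"),
    Band("gamma", 30.0, 100.0, "intense processing", "placeholder red"),
]


def band_for(frequency_hz: float) -> Optional[Band]:
    """Return the band containing the frequency, or None if it is out of range."""
    for band in BANDS:
        if band.low_hz <= frequency_hz < band.high_hz:
            return band
    return None
```

For example, `band_for(10.0)` returns the alpha entry, the state referred to in the reading scenario above.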

Brainwave Color Scale

As I explained previously, colors and our emotions interact dynamically with the surroundings. Leveraging this, the therapy turned out to be more positive and individualized, as I had aimed for at the start of the project.

The placement of the color gradient is well-executed, as it stays out of the user’s focused vision while still allowing them to notice the displayed color.

However, when it comes to testing this setup in a work environment, the current headset is too bulky and heavy for people to wear comfortably while working.

Although the pass-through quality has improved, it still falls short of allowing users to see clearly enough through the headset to read from their laptop screens and perform tasks effectively.

User testing & the future

I believe that as Mixed Reality technology advances in the coming years, more compact and lightweight glasses will become available.

At that point, we can conduct more rigorous user testing in real-world conditions and refine the design.

Design Intelligence Award 2024

NeuroFocus XR also won an Honorable Mention at the Design Intelligence Award 2024. This was a collective success for everyone involved in the project from start to end, who persevered through difficulties to see it succeed. I want to dedicate this award to them as a gesture of thanks.